<Project id="jokr" />

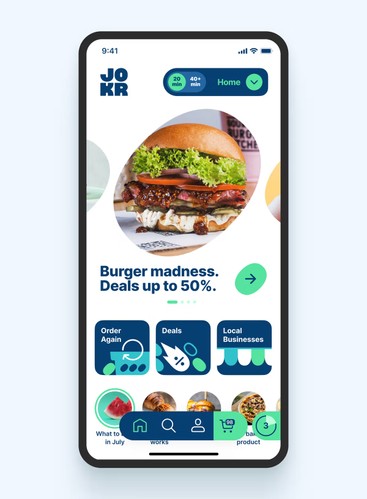

JOKR

Smart grocery shopping app powered by AI

“Although he started as a freelancer, Mohamed integrated seamlessly with our team, delivered full-stack features end-to-end, worked proactively across time zones, and shipped well-tested, reliable code while supporting other engineers.”—Ben Chen

VP of Engineering, JOKR

Project Overview

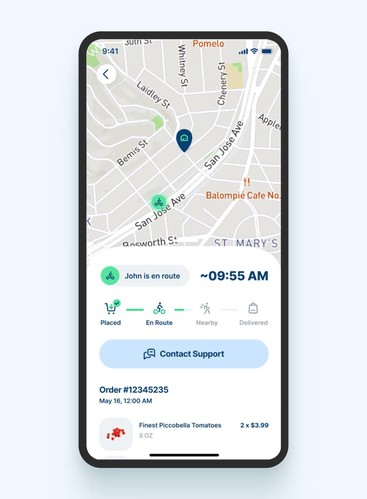

JOKR is an on-demand grocery app that enables quick home deliveries from personalized lists. I led work across backend services and internal dashboards, enabling safe, fast iteration as traffic and catalog complexity grew.

Challenges

- Ship new features quickly across multiple GraphQL services without breaking clients

- Give the Operations team reliable tooling for managing catalog, content, and promotions

- Improve performance, reliability, and developer velocity under growth

What I built

- Federated GraphQL microservices

    - Designed schemas/contracts optimized for Apollo Federation.

    - Delivered resolvers and data layers with strict typing and validation.

    - Standardized schema contracts and versioned releases, reducing regressions.

- Internal dashboards (React + Material UI)

    - Built dashboards for Operations to manage entities exposed by the services.

    - Built reusable packages/component libraries to standardize quality and shorten delivery cycles.

    - Added feature flags and audit logs to keep changes traceable.

- CI/CD and release hygiene

    - PR preview environments for services and dashboards.

    - Automated unit/E2E tests (Jest, Cypress) as required PR checks.

    - Docker/Kubernetes/Helm deploys to staging/production via GitHub Actions and Terraform.

    - Slimmer images and parallelized steps to shorten deploy time.

- Observability and reliability

    - Structured logging, metrics, and traces in Datadog with actionable alerts.

    - Incident runbooks that lowered MTTR and improved on-call confidence.

    - Hardened input validation, data access, and Auth0/JWT scope enforcement.

- Performance & scale

    - Sustained thousands of req/min at low p95 latency with DataLoader batching, caching, and efficient pagination.

    - Load testing to validate capacity and catch regressions before release.

    - Optimized media delivery with Cloudflare to cut payload size and improve render time.

- AI-powered product recommendations

    - Integrated a recommendations service using signals (order history, category affinity, interests).

    - Surfaced recommendations in the app and made them controllable from the dashboards.

    - Added guardrails (fallbacks, caps, telemetry) to keep recs safe and measurable.
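
To make the guardrails in the last item concrete, here is a minimal sketch of how fallbacks, caps, and a telemetry flag could fit together. It is an illustration, not the production code: `applyGuardrails`, the `Recommendation` shape, and the `maxItems`/`minItems` parameters are hypothetical names chosen for this example.

```typescript
// Hypothetical shape of a single recommendation; field names are assumptions.
interface Recommendation {
  productId: string;
  score: number; // model confidence, assumed to lie in [0, 1]
}

interface GuardrailResult {
  items: Recommendation[];
  usedFallback: boolean; // telemetry flag, e.g. to track the fallback rate
}

function applyGuardrails(
  recs: Recommendation[],
  fallback: Recommendation[],
  maxItems: number,
  minItems: number,
): GuardrailResult {
  // Drop entries with unusable scores and duplicate products.
  const seen = new Set<string>();
  const valid = recs.filter((r) => {
    const ok =
      Number.isFinite(r.score) &&
      r.score >= 0 &&
      r.score <= 1 &&
      !seen.has(r.productId);
    if (ok) seen.add(r.productId);
    return ok;
  });

  // Rank the surviving model output, best score first.
  const ranked = valid.sort((a, b) => b.score - a.score);

  // Fallback: too few usable items means we serve the curated list instead,
  // kept in its original order.
  const usedFallback = ranked.length < minItems;

  // Cap: never return more than maxItems, whichever source is used.
  const items = (usedFallback ? fallback : ranked).slice(0, maxItems);

  return { items, usedFallback };
}
```

Returning `usedFallback` alongside the items keeps the function pure while still giving the caller something to log, which is one way to make the guardrail measurable.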

Impact

- Sustained thousands of requests/min at low p95 latency (validated by load tests)

- Fewer regressions via contract discipline and versioned releases

- Higher delivery velocity with previews, checks, and standardized tooling

- Faster incident response (Datadog + runbooks → lower MTTR)

- CTR/AOV lift from personalized recommendations, contributing to revenue growth

Collaboration

- Partnered with Product to scope features and clarify trade-offs.

- Worked with Design on usable, consistent internal tooling.

- Co-led CI/CD with DevOps: standardized GitHub Actions/Terraform workflows and Kubernetes/Helm deploys; aligned on observability, alerts, and runbooks.

- Collaborated with Operations to map real workflows and add feature flags.

- Performed peer reviews and mentored engineers on federation patterns, testing, security, and observability best practices.

What I’d do next

- Add contract tests between services to catch schema drift earlier

- Run scenario-based load tests tied to growth projections

- Expand recs with a feature store and clearer offline evaluation
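
A contract test of the kind proposed in the first item could start as simply as comparing the fields a consumer depends on with the fields a service currently exposes. The sketch below assumes exactly that and nothing more: `findContractDrift` and the `Contract` shape are hypothetical, and a real setup would more likely derive both sides from the GraphQL schemas themselves and run the check in CI.

```typescript
// Maps a GraphQL type name to its fields and their type signatures,
// e.g. { Product: { id: "ID!", price: "Int!" } }. The shape is an assumption.
type Contract = Record<string, Record<string, string>>;

interface Drift {
  path: string; // "Type.field"
  kind: "missing" | "changed";
  expected: string;
  actual?: string;
}

// Returns every field the consumer expects that the provider no longer
// serves with the same signature. Extra provider fields are additive
// changes and are deliberately ignored.
function findContractDrift(expected: Contract, provided: Contract): Drift[] {
  const drift: Drift[] = [];
  for (const [typeName, fields] of Object.entries(expected)) {
    for (const [field, signature] of Object.entries(fields)) {
      const actual = provided[typeName]?.[field];
      const path = `${typeName}.${field}`;
      if (actual === undefined) {
        drift.push({ path, kind: "missing", expected: signature });
      } else if (actual !== signature) {
        drift.push({ path, kind: "changed", expected: signature, actual });
      }
    }
  }
  return drift;
}
```

A CI job could fail the build whenever the returned array is non-empty, which would surface breaking changes before they reach clients.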